Annual Showcase 2022

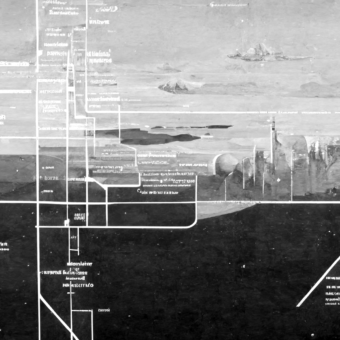

AI and the challenge of speculative Ethics (ESR14)

Future Design Practices

AI imaginaries and their contemporary socio-technical aspects are the focus of this research project, which reveals the moral commitments that underlie utopian and dystopian visions of AI development.

Un-Working Health Data (ESR8)

Sustainable Socio-Economic Models

A deeper understanding of how health data comes into value is needed to persuasively defend any alternative data governance model. This research project traces the flows of value(s) through the health data creation process.

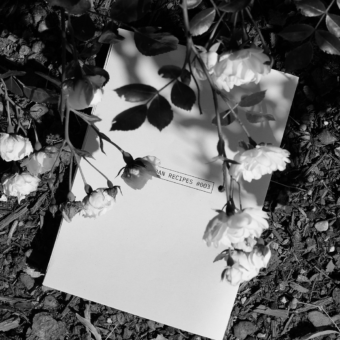

Urban Recipes (ESR4 + ESR12)

Trusted Interactions

Urban explorations are carried out by making and exchanging recipes. This design experiment combines spatial drifting with the recursive practices of recipe-making to facilitate more-than-human commoning.

Plumbing the Machine Learning Pipeline (Prototeam 1)

Future Design Practices

Developing machine learning systems requires multidisciplinary teams working across the ML pipeline. In this workshop, participants are invited to act out a fictional ML design scenario and reflect on how values are embedded and lost in industry practices.

Rethinking Digital Consent (DCODE Labs)

Democratic Data Governance - Aniek Kempeneers - TU Delft MSc

Digital platforms rely heavily on harvesting end-user data to provide personalized content. This master's thesis offers a design vision of digital consent practices and disclosure interactions as a process rather than a moment.

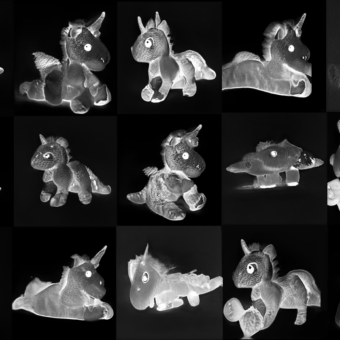

Creating monsters (DCODE Labs)

Future Design Practices - Anne Arzberger - TU Delft MSc

We all have unconscious tendencies to categorise and discriminate. These biases are perpetuated by structures of power baked into the data we feed to algorithms. This master's thesis offers a collection of queer, ambiguous toys, each illustrating a different kind of reflexive practice and designer-AI collaboration.

Decolonizing AI Histories in Practice (ESR15)

Future Design Practices

We cannot decolonize our digital futures without confronting the ghosts of our pasts. This research project extends the temporality of the current speculative model to allow for, and actively make space for, multiplicity in AI design practices.

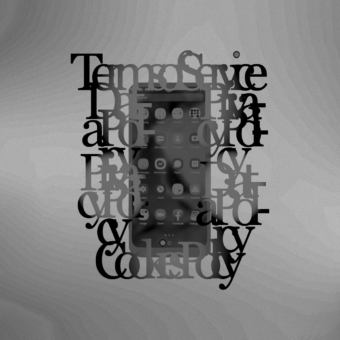

Speculating and experimenting with alternative ToS (ESR12)

Democratic Data Governance

Ongoing, experimental, speculative readings of Terms of Service (ToS) serve to reveal what these documents represent. In doing so, this research project takes a reflective and transformative alternative route.

Tracing algorithmic imaginaries in everyday life (ESR1)

Inclusive Digital Futures

By expanding design anthropology through the lens of social learning theories and developing novel methods for an ethnographic study of algorithms, this research project contributes to the more-than-human turn in HCI research and to decentralizing the study of algorithmic impact.

Principled requirements of ML workflows (ESR2)

Inclusive Digital Futures

By translating ethical guidelines into actual system requirements, this research project focuses on overcoming forms of harmful algorithmic behavior and explores computational strategies for putting ethics into practice in current Machine Learning workflows.

Sensing Care Through Design (Prototeam 2)

Future Design Practices

By collaborating with experts from machine learning, robotics, nursing studies, and healthcare, this prototeam has designed methods to investigate and envision future interactions between sensing technologies, care receivers, and the care network around them, from the standpoint of the affected.

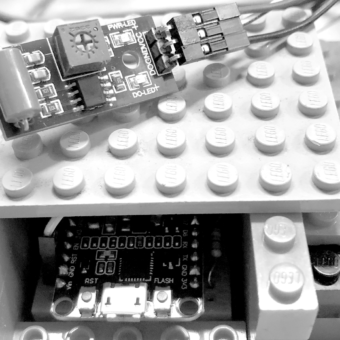

Co-creating data models in-situ (ESR10)

Sustainable Socio-Economic Models

By working with people to investigate, from a non-extractive and more communal perspective, what they find valuable to translate into data, this research project supports the co-creation of data models based on local datasets that members collect themselves with participatory and speculative DIY sensors.

Data as meaning-making practice in ecological futures (ESR9)

Sustainable Socio-Economic Models

Profit-driven services and technologies can be problematic when they limit the ability to interpret reality and, perhaps, to reconcile human and more-than-human worlds. This research project investigates how small-scale and urban farmers understand data and how they re-interpret it through their practices.

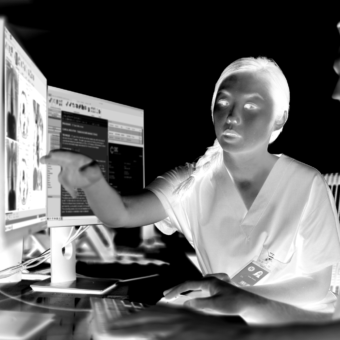

Calibrating trust in clinical AI (ESR7)

Trusted Interactions

High-level, abstract guidelines can be difficult to apply in practice to specific clinical AI projects. A workshop format has been designed and tested at a clinical AI company to help teams apply such guidelines to their projects.

Contestation Café (ESR6)

Trusted Interactions

Contestation Café is a speculative concept based on research into the shared values of contestation and repair. Through the act of writing a manifesto for contestation, the space has manifested itself in the present and is waiting only for the fixers and the public to arrive.

Mutant in the Mirror (ESR5)

Trusted Interactions

Mutant in the Mirror is an auto-theoretical experiment centered on queerness. It seeks to problematize notions of normativity and naturalization reproduced in and through artificially intelligent systems. It can be read as a gesture of resistance, a means to rethink encoded meaning beyond binary, hegemonic, heteronormative classification and categorization.

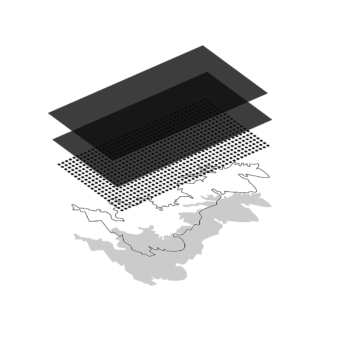

Data Dérive (ESR4)

Trusted Interactions

Data Dérive invites participants to wander in the city while interfacing with urban surveillance systems, probing their underlying social relations, and developing new modes of embodied resistance and subversion.

Power differentials and participation in data governance (ESR11)

Democratic Data Governance

Putting human agency and dignity in the spotlight requires robust measures of governance, security, and accountability. This research project explores how participatory design can contribute to envisioning democratic models of governance within data-driven systems.

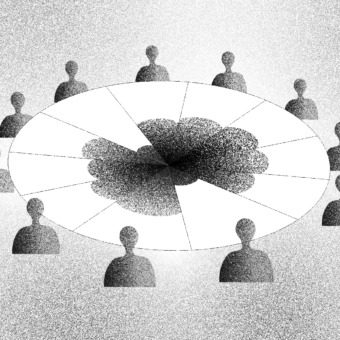

UNCOMMON CROWD (Prototeam 3)

Future Design Practices

The use of sensing technologies raises complex tensions and issues in the city. In this workshop, participants engage with auto-ethnographic and fictional modes of design inquiry to explore and prototype commons-based, open, and distributed modes of sensing in the wild.